I tried to get clever with my Notion setup last week. The idea was simple: let AI agents do the grunt work of keeping my Notion table fresh with industry news. On paper, it looked elegant. In practice? It broke almost immediately.

Here’s what happened when I let AI agents take over my Notion — and why the result was nothing like I expected.

The Experiment: AI Agents Meet Notion

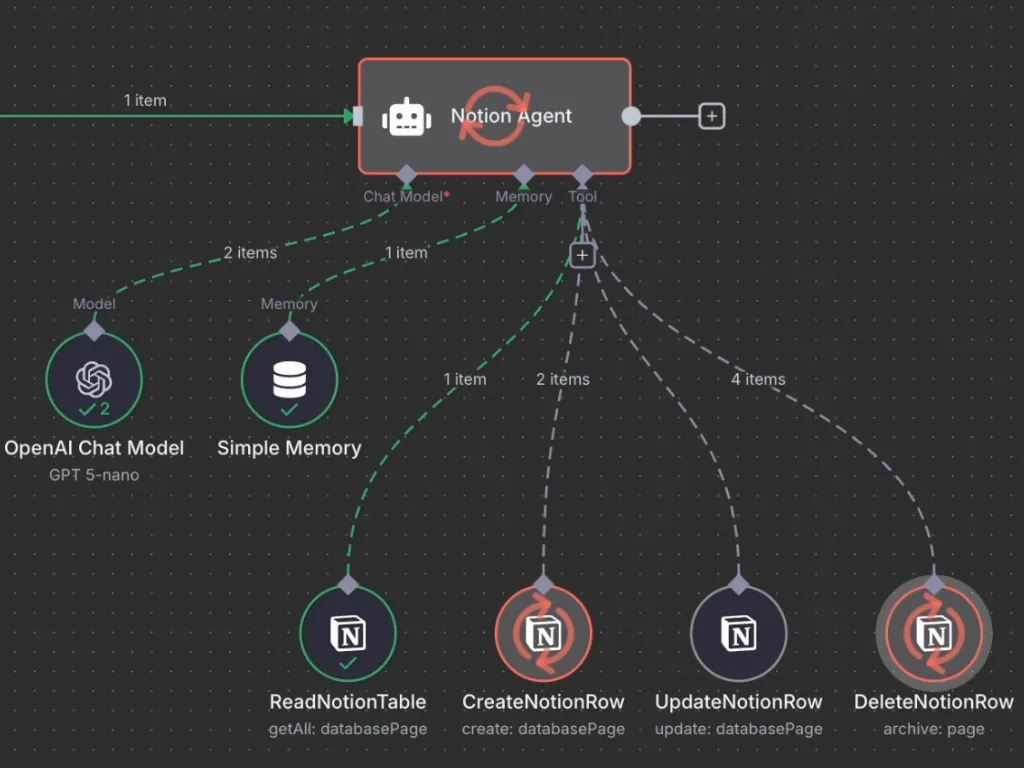

I set up two agents:

- Master Agent — collects fresh news from the internet and ranks it by relevance to my business.

- Curator Agent — gets CRUD tools (create, read, update, delete) to push those updates into my Notion database.

The goal: every day, my Notion table would quietly update itself with new articles, complete with links, timestamps, and relevance scores.
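To make the target concrete, here is a minimal sketch of the record each article was supposed to carry from the Master Agent to the Curator Agent, plus the "rank by relevance" step. The `NewsItem` class, the 0 to 1 relevance scale, and the `min_relevance` cutoff are hypothetical choices for illustration, not part of the original setup.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


# Hypothetical shape of one news item: the four fields the Notion table
# was supposed to receive (title, link, timestamp, relevance score).
@dataclass
class NewsItem:
    title: str
    url: str
    published_at: datetime
    relevance: float  # assumed scale: 0.0 (irrelevant) to 1.0 (highly relevant)


def rank_items(items: list[NewsItem], min_relevance: float = 0.5) -> list[NewsItem]:
    """Keep items at or above a relevance cutoff, most relevant first.

    The cutoff is an illustrative assumption, not part of the original workflow.
    """
    kept = [item for item in items if item.relevance >= min_relevance]
    return sorted(kept, key=lambda item: item.relevance, reverse=True)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    items = [
        NewsItem("Vendor raises prices", "https://example.com/a", now, 0.4),
        NewsItem("Competitor launches AI feature", "https://example.com/b", now, 0.9),
        NewsItem("New Notion API release", "https://example.com/c", now, 0.7),
    ]
    # Prints the two items above the cutoff, highest score first.
    for item in rank_items(items):
        print(f"{item.relevance:.1f}  {item.title}  {item.url}")
```

Every field here is what eventually went missing: only `title` made it into the table.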

Sounds like the dream, right?

The Reality Check

Instead, all I got were bare article titles.

No links.

No timestamps.

No relevance scores.

It was like asking someone to take notes for you and getting back a grocery list.

When I dug into the logs, the problem became obvious: the agent didn’t actually know how to use the tools I’d given it. The CRUD operations were there, but the AI treated them like half-understood instructions — fumbling, skipping steps, and leaving most of the data behind.

That’s when it hit me: this isn’t just a Notion problem. It’s the bigger issue with today’s AI automation. Unless you handhold the model through every detail, it will happily “wing it” — and winging it is a terrible strategy for reliable workflows.

Enter MCP: A Contract for AI Agents

This is where the Model Context Protocol (MCP), an open standard for connecting AI models to external tools and data, changes the game.

Instead of hoping the AI interprets your instructions correctly, MCP acts like a formal contract. It’s closer to an API spec than a prompt.

- It defines the tools available.

- It describes the inputs and outputs.

- It tells the model exactly what’s required to succeed.

If my Curator Agent had been using MCP, it wouldn’t have guessed at how to update Notion. It would have known it had to call createPage with the link, timestamp, and relevance score. No improvisation, no skipped steps.
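Here is roughly what that contract could look like. MCP describes each tool with a `name`, a `description`, and an `inputSchema` written in JSON Schema. The field names below (`url`, `published_at`, `relevance`) are hypothetical, and `validate_call` is a simplified hand-rolled stand-in for real JSON Schema validation: it checks only required fields and top-level types. The point it illustrates is that a title-only call, like the one my agent made, can be rejected instead of silently written to Notion.

```python
# Sketch of an MCP-style tool definition for createPage.
# The property names are hypothetical; adapt them to your own database.
CREATE_PAGE_TOOL = {
    "name": "createPage",
    "description": "Add one news article as a new row in the Notion news database.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "title": {"type": "string", "description": "Article headline"},
            "url": {"type": "string", "description": "Link to the article"},
            "published_at": {"type": "string", "description": "ISO 8601 timestamp"},
            "relevance": {"type": "number", "description": "Score from 0 to 1"},
        },
        "required": ["title", "url", "published_at", "relevance"],
    },
}

# Map the JSON Schema type names used above to Python types.
_JSON_TYPES = {"string": str, "number": (int, float)}


def validate_call(tool: dict, arguments: dict) -> list[str]:
    """Return a list of problems with a tool call; an empty list means it passes.

    Simplified check: required fields plus top-level property types only.
    """
    schema = tool["inputSchema"]
    errors = [
        f"missing required field: {name}"
        for name in schema.get("required", [])
        if name not in arguments
    ]
    for name, value in arguments.items():
        spec = schema["properties"].get(name)
        if spec is None:
            errors.append(f"unexpected field: {name}")
            continue
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, _JSON_TYPES[spec["type"]]):
            errors.append(f"wrong type for {name}: expected {spec['type']}")
    return errors


if __name__ == "__main__":
    # The kind of call my Curator Agent effectively made: a bare title.
    title_only = {"title": "Competitor launches AI feature"}
    print(validate_call(CREATE_PAGE_TOOL, title_only))
    # ['missing required field: url', 'missing required field: published_at', 'missing required field: relevance']
```

The schema doesn't force a model to behave, but it gives both the model and the server an explicit definition of a complete call.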

But There’s a Tradeoff

Even with MCP, another problem rears its head: cost and speed.

The workflow took about 90 seconds to finish. That’s forever when you’re waiting for a single update. And the token bill? Let’s just say I could feel it burning a hole in the API budget.

That’s the tension every founder and tinkerer runs into:

- Convenience-first: Let agents orchestrate everything. It feels magical, but it’s slow and expensive.

- Efficiency-first: Strip out the LLM where possible, use direct automation tools, and keep it lean. Less “magic,” but cheaper and faster.
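To show what "efficiency-first" means in practice: once the data is structured, writing a row to Notion is one deterministic HTTP request with zero tokens spent. The sketch below builds a request for Notion's public "create a page" endpoint (`POST https://api.notion.com/v1/pages`) using only the standard library. The property names ("Name", "Link", "Published", "Relevance"), the database ID, and the token are placeholders and must match your own database and integration; the `Notion-Version` value is one published API version, so check Notion's docs for the current one.

```python
import json
import urllib.request

NOTION_PAGES_URL = "https://api.notion.com/v1/pages"
NOTION_VERSION = "2022-06-28"  # a published Notion API version; newer ones may exist


def build_page_payload(database_id: str, title: str, url: str,
                       published_at: str, relevance: float) -> dict:
    """Build the JSON body for one new database row.

    Property names are hypothetical; they must match your database's columns
    (a title column, a URL column, a date column, and a number column).
    """
    return {
        "parent": {"database_id": database_id},
        "properties": {
            "Name": {"title": [{"text": {"content": title}}]},
            "Link": {"url": url},
            "Published": {"date": {"start": published_at}},
            "Relevance": {"number": relevance},
        },
    }


def build_request(token: str, payload: dict) -> urllib.request.Request:
    """Wrap the payload in an authenticated POST request (not sent here)."""
    return urllib.request.Request(
        NOTION_PAGES_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Notion-Version": NOTION_VERSION,
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    payload = build_page_payload(
        database_id="YOUR_DATABASE_ID",  # placeholder
        title="Competitor launches AI feature",
        url="https://example.com/b",
        published_at="2024-05-01T09:00:00Z",
        relevance=0.9,
    )
    req = build_request("YOUR_NOTION_TOKEN", payload)  # placeholder token
    print(req.get_method(), req.full_url)
    # Passing req to urllib.request.urlopen would send it; omitted so this runs offline.
```

Tools like n8n, Make.com, or Zapier wrap this same kind of call in a visual node; the LLM, if you keep one at all, only has to produce the structured fields.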

When to Use AI Agents in Notion

If your use case is light — curating daily content, logging mentions, testing ideas — AI agents can work fine, especially with MCP to keep them on track.

But if you care about real-time updates or predictable costs, you’re better off leaning on classic automation flows in n8n, Make.com, or Zapier. They don’t need magic to be reliable.

Takeaway

My Notion table didn’t turn into the self-updating knowledge base I imagined. But the experiment made one thing clear:

👉 Without protocols like MCP, AI agents are left guessing how to use their tools, and that makes them unreliable.

👉 With MCP, they finally behave — but you still pay the price in tokens and time.

The future of AI automation in Notion won’t be about clever prompts. It’ll be about balancing reliability, speed, and cost — and knowing when to let an agent take the wheel versus when to build a lean, traditional workflow.

Need help fixing your AI-coded setup or making agents actually work in production? Let’s talk.